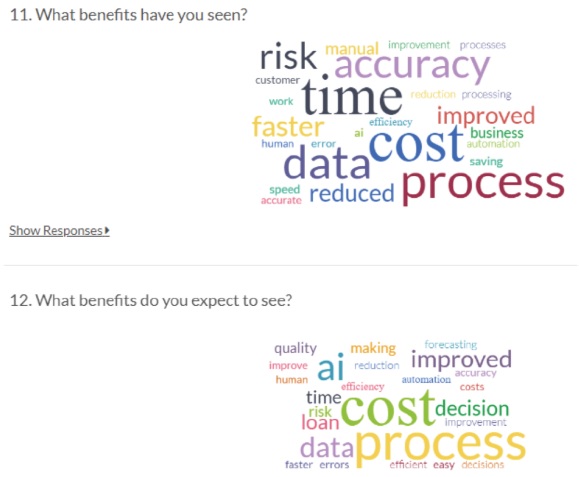

Artificial intelligence can be expensive and tricky to implement. Is it worth the trouble? Two organizations recently put that question to people working in financial institutions.

“Due to budget constraints, a company might not always be able to apply artificial intelligence. But, to those who can, the benefits have become clear,” said Mahdi Amri, Partner and National AI Services Leader, Canada, at Omnia, Deloitte’s artificial intelligence practice.

On January 24, 2019, Amri was the second of two panellists to discuss early results of a joint survey by SAS and the Global Association of Risk Professionals (GARP) in a webinar titled Operationalizing AI and Risk in Banking.

He listed the four main areas of benefit reported by survey respondents: maximizing revenue, elevating the customer experience, reducing costs and mitigating risk. However, respondents’ experience also showed that implementing AI is not always a seamless transition.

“Make sure the AI strategy is aligned to the corporate strategy,” Amri said. “Machine learning (ML) cannot be achieved on its own. You need to understand the company in order to implement AI strategy.”

His slide summarized five aspects of ML/AI modeling practices in finance:

- Assembling a universe of data

- Variable construction and reduction

- Event generation and reduction

- Journey discovery and analysis

- Building the business case

“You can always use more data, because that will allow you to build more robust models,” he said. “More data will enhance the level of competency to the organization.”

“Data leads to insight in a shorter time,” Amri said. “AI is a matter of profitability. It decreases the risk, because instead of a small sampling of data, you can use the entire population.” He noted that 44.6 percent of survey respondents were already using AI for credit scoring.

In practice, however, using AI/ML poses certain challenges. “Modeling experts typically don’t have business expertise. They don’t understand business requirements, practices and decision-making,” Amri said. “The modellers can use popular open-source libraries but might use them without checking the underlying mechanics of the functions and libraries they use. The field of data science is evolving quickly.”

These shifts in modeling are also reshaping the regulatory outlook. Financial regulators still look for conceptual soundness, validation testing and model monitoring, but new considerations have arisen, chief among them interpretability. “How can we make sure model validation is understood by all participants?” he asked.

Amri touched on five issues related to transparency and interpretability:

- What is the role of identity?

- How can data flows be monetized?

- How can banks mitigate the technology-governance gap?

- Can transparency be implemented in a systemic fashion?

- Cooperation, collaboration and partnership issues

He then looked in more detail at two “use cases,” the second of which was Aladdin, BlackRock’s proprietary risk management tool.

“AI adoption is strong and growing,” Amri concluded. “AI can improve the quality of information and consequent risk-reward strategy.” Going forward, “AI needs to focus on the business impact.”

A separate article covers the first panellist, Katherine Taylor.

A recording of the webinar, Operationalizing AI and Risk in Banking, is available to view.

Credit for photo of William Deloitte: Public Domain, https://commons.wikimedia.org/w/index.php?curid=164872

Credit for thumbnail picture of robot hand: https://thebossmagazine.com/ai-robots/working-robots_attachment/