Artificial intelligence (AI) is being implemented in nearly all sectors of the economy at an increasing rate. A 2019 survey by Gartner showed that 37 percent of organizations had already implemented AI in some form. When it comes to integrating AI into your company, what are the risks? What are the opportunities?

On March 12, 2021, the Industry Relations and Corporate Governance Committee of the CFA Society Toronto convened a panel of experts to look at how effectively a company’s corporate governance can provide proper oversight and avoid mistakes. In other words, “who and how we will mind the bots.”

Three panellists looked at the scope and risks of AI applications, and how the data is stored and used. The speakers touched on many issues: user manipulation, invasion of privacy, and social segmentation. They pointed out regulatory, counterparty, cyber-security, and operational risks. The webinar was moderated by Monique Morden, Founder and Advisor of financial AI firm Judi.ai.

“Machine learning is a branch of AI where we learn from data and can improve the accuracy of predictions,” said Laila Paszti, Partner, Norton Rose Fulbright Canada LLP. She cited the example of an oil & gas company whose machine learning approach has three phases: gathering data, building the model, and deployment. “No single piece of legislation covers all three phases.”

Paszti distinguished between “hard regulation,” which involves fines or criminal penalties, and softer, principles-based rules. One example of hard regulation covers the privacy of healthcare data, which can be used only with consent, as strictly laid out by the Information and Privacy Commissioner of Ontario.

In the case of the American start-up Clearview AI, which developed facial recognition software, the regulators said that consent had not been obtained and, furthermore, that the software worked to the detriment of the individuals whose data had been collected without their knowledge. Although the start-up was reprimanded, she noted, “the regulator did not ask Clearview AI to delete the models it developed.”

Other aspects of AI regulation tend to be principles-based, especially in Canada. Provincial consumer protection laws say that consent must be given knowingly. She cited another example in which a customer loyalty program collected information on spending habits “supposedly just to award loyalty points” but was using the data to build sales prediction algorithms. “This is a misleading use of collected data.”

Consent must also be limited to a defined period and must not be framed too broadly. Another example of improperly obtained consent was the start-up Ever, Inc., which offered “free cloud storage for your photos” and then used the images to build facial recognition software that it offered to clients, including the military.

Risk Management

Can the risks of AI be properly managed? “We found six types of risk around AI when we took the human out of the loop,” said Bahar Sateli, Manager in AI and Analytics at the accountancy and consulting firm PwC Canada.

Prediction is never 100 percent accurate, she said, “so we asked ourselves, should we bring a human into the loop when the AI decision has very big stakes in someone’s life, such as whether to hire them or to give them a major loan?”
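A common way to put a human into the loop is to let the model act on its own only when a decision is low-stakes and the model is confident, and to send everything else to a person. The minimal Python sketch below illustrates the idea under stated assumptions; it is not PwC’s process. The decision types in `HIGH_STAKES`, the `CONFIDENCE_THRESHOLD` of 0.9, and the `Decision` and `route` names are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical decision types that always go to a person, whatever the model says.
HIGH_STAKES = {"hiring", "major_loan"}

# Hypothetical cut-off: below this confidence, a person reviews the decision.
CONFIDENCE_THRESHOLD = 0.9


@dataclass
class Decision:
    decision_type: str
    approve_probability: float  # model's estimated probability that "approve" is right


def route(decision: Decision) -> str:
    """Return "human_review" or "automatic" for one model decision."""
    if decision.decision_type in HIGH_STAKES:
        return "human_review"
    # Confidence in whichever outcome the model favours (0.5 = coin flip, 1.0 = certain).
    p = decision.approve_probability
    confidence = max(p, 1 - p)
    if confidence < CONFIDENCE_THRESHOLD:
        return "human_review"
    return "automatic"


print(route(Decision("hiring", 0.99)))           # human_review
print(route(Decision("marketing_offer", 0.95)))  # automatic
print(route(Decision("marketing_offer", 0.60)))  # human_review
```

Sending every high-stakes case to a person, however confident the model is, trades some efficiency for accountability on exactly the decisions that matter most to the individual.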

“Once a model goes into production, it’s like a living thing,” Sateli said. “You need ongoing monitoring—someone must have ownership so they can shut down the process” when AI goes rogue.
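Ownership and a shutdown path can be made concrete. The minimal Python sketch below, under assumed settings, tracks a model’s recent accuracy and halts it when accuracy falls below a floor, leaving it halted until its named owner intervenes. The `ModelMonitor` class, the `owner` field, the 100-case window, and the 0.8 floor are hypothetical; real monitoring would also watch for shifts in the input data and alert the owner.

```python
from collections import deque
from typing import Optional


class ModelMonitor:
    """Tracks recent prediction outcomes and halts the model if accuracy drops."""

    def __init__(self, owner: str, window: int = 100, min_accuracy: float = 0.8):
        self.owner = owner                    # person accountable for the model
        self.outcomes = deque(maxlen=window)  # 1 = correct, 0 = wrong; oldest drop off
        self.min_accuracy = min_accuracy
        self.halted = False
        self.halt_reason: Optional[str] = None

    def record(self, correct: bool) -> None:
        """Log whether a prediction turned out to be correct."""
        self.outcomes.append(1 if correct else 0)
        # Judge only once the window is full, so a few early errors do not trip it.
        if len(self.outcomes) == self.outcomes.maxlen:
            accuracy = sum(self.outcomes) / len(self.outcomes)
            if accuracy < self.min_accuracy:
                self.halt(f"rolling accuracy {accuracy:.2f} below {self.min_accuracy}")

    def halt(self, reason: str) -> None:
        # Stays halted until the owner investigates; a real system would also alert them.
        self.halted = True
        self.halt_reason = reason

    def may_predict(self) -> bool:
        return not self.halted


monitor = ModelMonitor(owner="model.owner@example.com", window=10, min_accuracy=0.8)
for _ in range(10):
    monitor.record(True)
for _ in range(3):
    monitor.record(False)
print(monitor.may_predict())  # False
print(monitor.halt_reason)    # rolling accuracy 0.70 below 0.8
```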

At PwC, before AI is approved, the developer must answer two questions: “How does my model work overall?” and “What are the main attributes?” For example, is postal code used as a proxy for household income?
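The postal-code question can be tested by measuring how much of the variation in a sensitive attribute, such as household income, a feature explains. The Python sketch below uses the correlation ratio (eta squared), one standard statistic for a categorical feature against a numeric attribute. It is an assumed approach for illustration, not PwC’s method; the `looks_like_proxy` helper, the 0.5 cut-off, and the sample data are hypothetical.

```python
from collections import defaultdict
from statistics import fmean


def correlation_ratio(categories, values):
    """Eta squared: share of the variance in `values` explained by `categories` (0 to 1)."""
    overall_mean = fmean(values)
    total_ss = sum((v - overall_mean) ** 2 for v in values)
    if total_ss == 0:
        return 0.0  # the attribute does not vary, so nothing can proxy for it
    groups = defaultdict(list)
    for category, value in zip(categories, values):
        groups[category].append(value)
    # Between-group sum of squares: how far each group's mean sits from the overall mean.
    between_ss = sum(len(g) * (fmean(g) - overall_mean) ** 2 for g in groups.values())
    return between_ss / total_ss


def looks_like_proxy(feature, sensitive_attribute, threshold=0.5):
    """Flag a feature that explains at least `threshold` of a sensitive attribute's variance."""
    return correlation_ratio(feature, sensitive_attribute) >= threshold


# Hypothetical sample: postal areas and household incomes (thousands of dollars).
postal_areas = ["area_1", "area_1", "area_2", "area_2"]
incomes = [210, 190, 55, 60]
print(looks_like_proxy(postal_areas, incomes))  # True
```

A high score does not prove the model is unfair, but it signals that dropping income from the inputs may not remove its influence, which is the kind of finding a reviewer would want explained.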

“We may go with the less powerful model but it’s worth it, if it’s more explainable” to regulators, corporate governance experts, and other stakeholders, Sateli said.

Investor / Board Perspective

Investors have been thinking about AI and corporate governance for a long time “through the lens of privacy,” said Christine Chow, Director, Global Tech Lead of Federated Hermes.

In May 2018, a landmark European law took effect: the General Data Protection Regulation (GDPR).

Companies seeking to use personal data must put in place appropriate technical and organizational measures to protect it. Business processes that handle personal data are expected to use safeguards such as pseudonymization or full anonymization, and data controllers must design information systems with privacy front of mind, so that, for instance, datasets cannot readily be used to identify an individual.
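Pseudonymization replaces direct identifiers, such as names or email addresses, with tokens that can be linked back to a person only with separately held information. A keyed hash is one common way to do this. The minimal Python sketch below uses HMAC-SHA256 from the standard library; the function names, the `email` field, and the lowercase normalization are assumptions for illustration. Under the GDPR, pseudonymized data still counts as personal data, because whoever holds the key can link tokens back to people; only properly anonymized data falls outside the regulation.

```python
import hashlib
import hmac
import secrets

# The key is the "additional information" that must be kept apart from the data.
# Generated at start-up here for illustration only; a real deployment would load a
# stable key from a key-management system, or tokens would change on every run.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a stable keyed-hash token (HMAC-SHA256, hex)."""
    normalized = identifier.strip().lower()  # treat "Alice@X.com " and "alice@x.com" alike
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_records(records, id_field="email", key=PSEUDONYM_KEY):
    """Return copies of the records with the identifier field replaced by its token."""
    result = []
    for record in records:
        copy = dict(record)  # leave the caller's data untouched
        copy[id_field] = pseudonymize(copy[id_field], key)
        result.append(copy)
    return result


customers = [{"email": "Alice@Example.com", "monthly_spend": 120}]
print(pseudonymize_records(customers))  # email replaced by a 64-character hex token
print(pseudonymize("Alice@Example.com") == pseudonymize("alice@example.com"))  # True
```

Because the same person always maps to the same token, analysts can still join and count records without seeing who they belong to.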

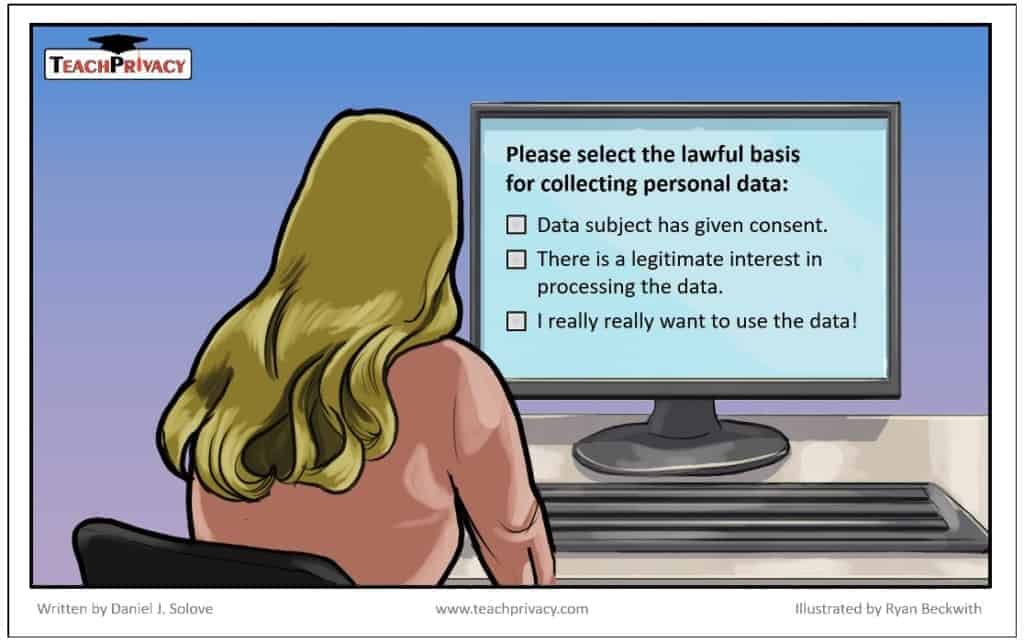

The law does, however, set out six lawful bases for processing personal data: consent, contract, public task, vital interests, legitimate interests, and legal obligation. Even when consent is the basis, there are limits, and the data subject (the person) has the right to withdraw it at any time.
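The consent conditions the panellists described (a specific purpose, a limited period, and the right to withdraw at any time) can be checked before each use of the data. The minimal Python sketch below assumes consent is the lawful basis in play; the `ConsentRecord` class, its fields, and the purpose labels are hypothetical. The labels echo the loyalty-program example, where data gathered to award points was reused for sales prediction.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import FrozenSet, Optional


@dataclass
class ConsentRecord:
    """One person's consent: for specific purposes, for a limited period, revocable."""
    subject_id: str
    purposes: FrozenSet[str]   # the specific purposes the person agreed to
    granted_at: datetime
    expires_at: datetime       # consent lapses at this moment
    withdrawn_at: Optional[datetime] = None

    def withdraw(self, when: datetime) -> None:
        # The data subject may withdraw consent at any time.
        self.withdrawn_at = when

    def permits(self, purpose: str, when: datetime) -> bool:
        """True only for an agreed purpose, inside the period, and before any withdrawal."""
        if self.withdrawn_at is not None and when >= self.withdrawn_at:
            return False
        if not (self.granted_at <= when < self.expires_at):
            return False
        return purpose in self.purposes


record = ConsentRecord("customer-42", frozenset({"loyalty_points"}),
                       datetime(2021, 1, 1), datetime(2022, 1, 1))
print(record.permits("loyalty_points", datetime(2021, 6, 1)))    # True
print(record.permits("sales_prediction", datetime(2021, 6, 1)))  # False: purpose not agreed
```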

“General counsel at a firm must make sure that any algorithm used does not contravene privacy regulations,” Chow said.

She gave an example of AI and good corporate governance. Liberty Mutual used a third-party chatbot to talk to customers. (This approach has been emulated by other insurance companies.) The company became aware of a potential hiccup: the chatbot, developed in one country, was biased toward understanding that country’s accent, vocabulary, and slang. Over time, machine learning corrected this bias.

The rule of thumb is that the objective of the AI use “must be narrow,” Chow said. The problem it addresses must be well-defined and bounded.

Companies seeking to leverage third-party AI must make sure the source company did due diligence as it created the AI app or algorithm. She recommended the online Trusted AI Assessment document as a way to engage with companies. ♠️

The Trusted AI Assessment document, the Assessment List for Trustworthy Artificial Intelligence (ALTAI) for self-assessment, is available on the European Commission’s Shaping Europe’s digital future website (europa.eu).

The image for facial recognition is from the LA Times website. Permission pending.

The final image is from TeachPrivacy.com. Permission pending.